Echo Cancellation Methods

What is the difference between acoustic echo cancellation and line echo cancellation?

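Acoustic echo cancellation (AEC) and line echo cancellation (LEC) both remove echo from voice communication, but they target different echo sources. Acoustic echo occurs when a microphone picks up sound from a loudspeaker in the same room, for example on a speakerphone call or in a video conference, so the far-end talker hears their own voice returned. Line echo, also called network or hybrid echo, occurs in telephone networks when impedance mismatches at the hybrid circuits that convert between two-wire and four-wire lines reflect part of the transmitted signal back toward the talker. The echo paths differ as a result. A room's acoustic path is typically long and changes whenever people or objects move, while an electrical line's path is usually shorter and more stable. Both techniques share the same goal: improving audio quality by removing the echo while preserving the near-end speech.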
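The sketch below shows one common way to build either kind of canceller: a normalized least-mean-squares (NLMS) adaptive filter. The filter learns an estimate of the echo path from the far-end signal, predicts the echo, and subtracts it from the microphone or line signal. It rests on stated assumptions. The function name `nlms_echo_cancel`, the step size `mu`, and the simulated echo path are hypothetical illustrations. The sketch also omits the double-talk detection and nonlinear processing that practical cancellers usually add.

```python
import random


def nlms_echo_cancel(far_end, mic, taps, mu=0.5, eps=1e-6):
    """Cancel the echo of `far_end` in `mic` with a normalized LMS filter.

    Returns (residual, weights): residual is the mic signal with the
    estimated echo subtracted; weights is the final echo-path estimate.
    """
    w = [0.0] * taps       # adaptive estimate of the echo path impulse response
    x_buf = [0.0] * taps   # most recent far-end samples, newest first
    residual = []
    for x_n, d_n in zip(far_end, mic):
        # Shift the newest far-end sample into the delay line.
        x_buf = [x_n] + x_buf[:-1]
        # Predicted echo: weights dotted with the recent far-end samples.
        y_hat = sum(wi * xi for wi, xi in zip(w, x_buf))
        # Error: what remains after subtracting the predicted echo.
        e = d_n - y_hat
        residual.append(e)
        # NLMS update: step size normalized by the power in the delay line.
        norm = eps + sum(xi * xi for xi in x_buf)
        w = [wi + (mu * e / norm) * xi for wi, xi in zip(w, x_buf)]
    return residual, w


# Demo with a hypothetical 4-tap echo path and no near-end speech.
random.seed(0)
echo_path = [0.5, 0.3, -0.2, 0.1]
far_end = [random.uniform(-1.0, 1.0) for _ in range(2000)]
# Microphone signal = far-end signal convolved with the echo path.
mic = [
    sum(h * far_end[n - k] for k, h in enumerate(echo_path) if n - k >= 0)
    for n in range(len(far_end))
]
residual, w = nlms_echo_cancel(far_end, mic, taps=8)
print("final weights:", [round(wi, 3) for wi in w])
```

The same structure applies to AEC and LEC. The main difference is the number of taps needed to cover the echo tail. For example, a 64 ms line-echo tail sampled at 8 kHz needs 64 × 8 = 512 taps, while a 250 ms acoustic tail at 16 kHz needs 4,000. Normalizing the step by the delay line's power keeps the update stable for 0 < `mu` < 2, independent of the far-end signal level.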

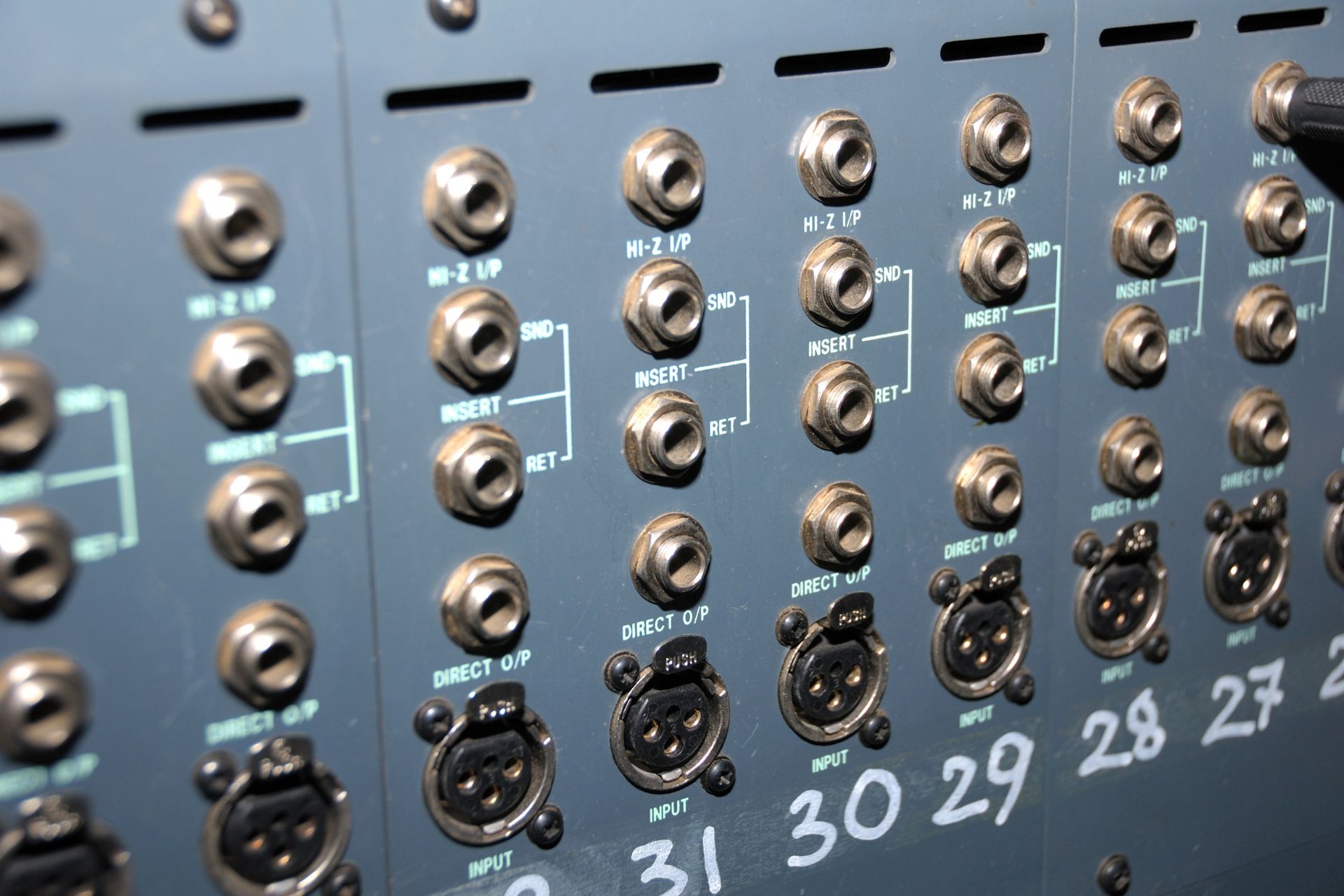
